In this article we will walk through how to creation partitions using apache drill on data residing in operating system.

Many a times , we have data generated or received as files at a certain frequency i.e. daily , hourly etc and we want in effective way to query only a certain section of data without scanning all the files.

For our e.g , we will assume that we receive files every quarter within a year and or requirement is that when we query the data for a particular query , only that quarter file gets scanned and not all the files. The advantage of drill is that it doesn't generate and persist metadata before hand and generates the same on the fly.

Lets assume that our files resides in folder with a year and with year , folders for quarters are created and each quarterly folder has a file for that particular quarter. So our folder structure will look something like this:

<<BasePath>>/2016/Q1/file.csv

<<BasePath>>/2016/Q2/file.csv

<<BasePath>>/2016/Q3/file.csv

<<BasePath>>/2016/Q4/file.csv

<<BasePath>>/2017/Q1/file.csv

<<BasePath>>/2017/Q2/file.csv

<<BasePath>>/2017/Q3/file.csv

<<BasePath>>/2017/Q4/file.csv

Lets assume that our files resides in folder with a year and with year , folders for quarters are created and each quarterly folder has a file for that particular quarter. So our folder structure will look something like this:

<<BasePath>>/2016/Q1/file.csv

<<BasePath>>/2016/Q2/file.csv

<<BasePath>>/2016/Q3/file.csv

<<BasePath>>/2016/Q4/file.csv

<<BasePath>>/2017/Q1/file.csv

<<BasePath>>/2017/Q2/file.csv

<<BasePath>>/2017/Q3/file.csv

<<BasePath>>/2017/Q4/file.csv

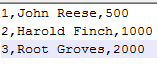

- Each of the file will have the sample data in the below format:

- Now we need to create a Apache drill view on top of this data till the base path i.e. <<BasePath>>

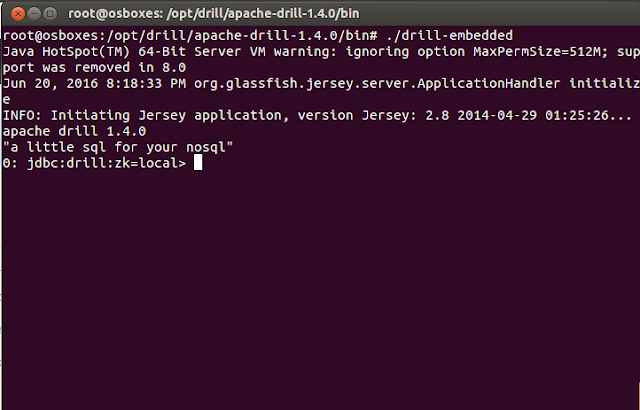

- Visit the <<DrillHome>> path log into the Apache Drill terminal console using below command: bin/sqlline -u jdbc:drill:schema=dfs;zk=<<IP:Port>>

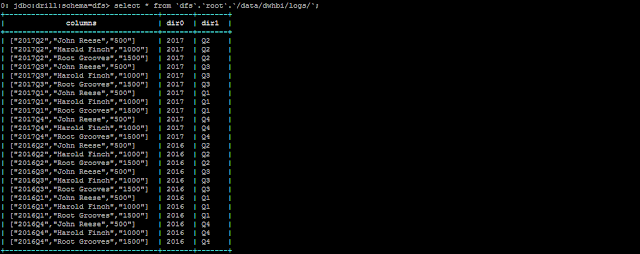

- Just run a query on the base path to ensure that the data is accessible. select * from `dfs`.`root`.`/data/dwhbi/logs/`;

- You would have observed above that the output of the above query gives dir0 and dir1 as 2 separate columns. Now we will create a view on top of this data using the generated columns dir0 and dir1.

- First set the workspace by executing the command use dfs.root;

- Now create a view by executing the below command. CREATE VIEW data_partition AS SELECT dir0 AS `Year`,dir1 AS `Qtr`,columns[0] As `Id`,columns[1] As `Name`,columns[2] AS `Sales` FROM `dfs`.`/data/dwhbi/logs/`;

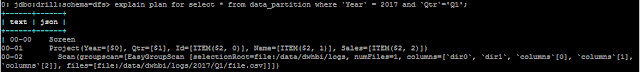

- Once the view is created , you can fire a query to fetch the relevant data for a year and quarter.

- We can view the plan to see how many files were scanned to fetch the data to ensure that the partition pruning happened.

- As seen above only the relevant file was scanned and not all the files.

- Do revert/comments if you have any observations.